Machine Learning and ETW

In my previous ETW blog, I detailed how subscribing to ETW providers can be like opening the flood gates. Many providers can generate literally millions of events an hour. I don’t care how good an analyst you are, you cannot efficiently analyze that many events.

So how do we efficiently deal with a large scale of events?

Machine Learning specializes in dealing with volumes of data that just aren’t feasible for humans to work through. Being able to identify and learn patterns allows for anomaly detection, and with it the detection of a potential compromise.

This project as a whole would be way too large for a single blog, so I’m initially going to cover the Microsoft-Windows-Kernel-Process provider, with the Image and Process keywords enabled.

Disclaimer

This is my first project using Machine Learning, and I’ve only been teaching myself for about a month. If you notice that I’ve done something wrong, or you can see some improvements, please let me know! I’m keen to hear how I can improve this project!

It’s also worth noting that this dataset is just from my personal computers. It’s only about four days’ worth of data. I’m not trying to prove that this is the latest and greatest, but rather to demonstrate how ML could be applied to ETW events. In future, I’d like to have a much larger collection of data to test against.

Collecting the Data

Velociraptor EDR is my tool of choice for ETW collection. It provides an easy method for collecting ETW across a network through the watch_etw() plugin. To collect the Microsoft-Windows-Kernel-Process provider, I used the following Client Event artifact:

    name: Custom.ETW.Kernel_Process
    description: |
      Collects all image load/unload and process start/stop events.

    # Can be CLIENT, CLIENT_EVENT, SERVER, SERVER_EVENT
    type: CLIENT_EVENT

    sources:
      - precondition:
          SELECT OS From info() where OS = 'windows'

        query: |
          SELECT * FROM watch_etw(
            guid="{22FB2CD6-0E7B-422B-A0C7-2FAD1FD0E716}",
            any=0x50
          )

Initially I’m only going to target Event ID 1: Process Start, while I am still getting my head around machine learning. In future, I’d like to also look at Event ID 5: Image Load due to the

Event ID 1: Process Start is presented in the following format:

I grabbed the following fields out of the data:

File Path

Time Stamp

Event ID 5: Image Load is presented in the following format:

A huge limiting factor for both of these events is that there is no parent process name. In future I may try to combat this by iterating through the Process Start list again, looking up the parent’s PID, and assigning the most recently created process with that ID as the parent of the resulting child.
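The parent lookup idea could be sketched roughly like this. It assumes each Process Start event carries a parent PID next to its own PID, which I haven’t confirmed here; the keys ts, pid, parent_pid and image are hypothetical names for already-parsed events, and the sample data is made up.

```python
from bisect import bisect_right
from collections import defaultdict

# Hypothetical, already-parsed Process Start events (illustrative values only).
EVENTS = [
    {"ts": 100.0, "pid": 500, "parent_pid": 4, "image": "explorer.exe"},
    {"ts": 200.0, "pid": 900, "parent_pid": 500, "image": "cmd.exe"},
    {"ts": 300.0, "pid": 500, "parent_pid": 4, "image": "notepad.exe"},  # PID 500 reused
    {"ts": 400.0, "pid": 901, "parent_pid": 500, "image": "calc.exe"},
]


def resolve_parent_names(events):
    """Attach the image of the most recent process that started with the parent's PID."""
    ordered = sorted(events, key=lambda e: e["ts"])

    # For every PID, keep its start times and images in time order.
    start_times = defaultdict(list)
    start_images = defaultdict(list)
    for e in ordered:
        start_times[e["pid"]].append(e["ts"])
        start_images[e["pid"]].append(e["image"])

    resolved = []
    for e in ordered:
        times = start_times.get(e["parent_pid"], [])
        # Index of the last start of that PID at or before the child's start.
        idx = bisect_right(times, e["ts"]) - 1
        parent = start_images[e["parent_pid"]][idx] if idx >= 0 else None
        resolved.append({**e, "parent_image": parent})
    return resolved


RESOLVED = resolve_parent_names(EVENTS)
```

Because PIDs get reused, matching on the PID alone isn’t enough, which is why the sketch picks the closest earlier start. Parents that started before the capture began simply resolve to None.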

Velociraptor outputs the data in a JSON format, so I iterate through the fields that I want and ingest them into a Pandas Data Frame.

During ingestion, I separated:

File Path into the name and path.

Timestamp into Day of the Week and Hour of Day (UTC)

This made analysis on the individual aspects of the variables much easier.
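As a rough sketch of that ingestion step, the splitting could look something like the following. The JSON layout (System.ID, System.TimeStamp, EventData.ImageName) is an assumption about what the collected rows look like, the sample rows are made up, and lower-casing the strings is my own addition for illustration. The resulting list of dicts could then be handed to pandas.DataFrame.

```python
import json
import ntpath
from datetime import datetime, timezone

# Hypothetical sample rows; the field names are assumptions, not confirmed output.
SAMPLE_EVENTS = [
    {"System": {"ID": 1, "TimeStamp": "2021-03-01T09:15:00Z"},
     "EventData": {"ImageName": r"C:\Windows\System32\cmd.exe"}},
    {"System": {"ID": 5, "TimeStamp": "2021-03-01T09:15:01Z"},
     "EventData": {"ImageName": r"C:\Windows\System32\ntdll.dll"}},
]
SAMPLE_JSONL = "\n".join(json.dumps(e) for e in SAMPLE_EVENTS)


def parse_process_start(line):
    """Turn one JSON line into a flat row, or None if it is not Event ID 1."""
    event = json.loads(line)
    if event["System"]["ID"] != 1:
        return None

    # Split the Windows path into directory and file name.
    file_path, file_name = ntpath.split(event["EventData"]["ImageName"])

    # Normalise a trailing "Z" so fromisoformat() accepts it on older Pythons.
    raw_ts = event["System"]["TimeStamp"].replace("Z", "+00:00")
    ts = datetime.fromisoformat(raw_ts).astimezone(timezone.utc)

    return {
        "file_name": file_name.lower(),
        "file_path": file_path.lower(),
        "event_hour": ts.hour,      # 0-23, UTC
        "event_dow": ts.weekday(),  # 0 = Monday
    }


rows = [r for r in map(parse_process_start, SAMPLE_JSONL.splitlines()) if r is not None]
```

Using ntpath rather than os.path keeps the split correct even when the analysis runs on a non-Windows machine.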

The Known Bad

For this model, I’ve gone with a supervised approach, aiming to provide 50% Known Good and 50% Known Bad data to train the model.

For argument’s sake, I’m going to say that all the data ingested through Velociraptor is known good. I think that’s safe to say unless there’s an unknown compromise going on within my network. But considering the sheer amount of Known Good data, how do I get enough Known Bad data?

For Known Bad data, I used a mix of data sources which mostly boiled down to:

Previous investigations,

Investigation reports that I found in the Open Source, and

Intel Feeds

With these three data sets though, I still have nowhere near enough data to meet that 50/50 quota. To try and combat this, I wrote a script to take aspects of all the data that I collated and generate fake Known Bad events based on real Known Bad events. Without going on for too long, some of the aspects that I had to consider were:

Finding a way to represent the variables most commonly used by actors, without forgetting the less common or random aspects of their interactions.

Capturing the start and finish times of known actor sessions, picking a session at random, then generating an event timestamp within that session window.

Properly representing impersonation of legitimate processes and services: making an event seem identical to a known good one, but with a variance, such as the path.
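To give an idea of how those three considerations can fit together, here is a minimal sketch. It is not my actual script: every name, path, session window and the masquerade_rate parameter below is invented for illustration.

```python
import random
from datetime import datetime, timedelta

# All values below are hypothetical placeholders, not real investigation data.
REAL_BAD_EVENTS = [
    {"file_name": "powershell.exe", "file_path": r"c:\windows\system32\windowspowershell\v1.0"},
    {"file_name": "powershell.exe", "file_path": r"c:\windows\system32\windowspowershell\v1.0"},
    {"file_name": "rundll32.exe", "file_path": r"c:\windows\system32"},
]
ACTOR_SESSIONS = [
    (datetime(2021, 3, 1, 22, 0), datetime(2021, 3, 2, 1, 30)),
    (datetime(2021, 3, 3, 19, 45), datetime(2021, 3, 3, 23, 10)),
]
KNOWN_GOOD_NAMES = ["svchost.exe", "lsass.exe"]
UNUSUAL_PATHS = [r"c:\users\public", r"c:\programdata"]


def random_time_in_session(rng):
    """Pick a session at random, then a timestamp inside its window."""
    start, end = rng.choice(ACTOR_SESSIONS)
    offset = rng.uniform(0, (end - start).total_seconds())
    return start + timedelta(seconds=offset)


def generate_bad_event(rng, masquerade_rate=0.3):
    """Generate one fake Known Bad event based on the real ones."""
    if rng.random() < masquerade_rate:
        # Impersonation: a legitimate-looking name running from an odd path.
        name, path = rng.choice(KNOWN_GOOD_NAMES), rng.choice(UNUSUAL_PATHS)
    else:
        # Sampling from the raw list keeps common values common
        # while still letting rare ones through occasionally.
        template = rng.choice(REAL_BAD_EVENTS)
        name, path = template["file_name"], template["file_path"]

    ts = random_time_in_session(rng)
    return {
        "file_name": name,
        "file_path": path,
        "timestamp": ts,
        "event_hour": ts.hour,
        "event_dow": ts.weekday(),
        "label": 1,  # 1 = Known Bad
    }


rng = random.Random(0)  # fixed seed so runs are repeatable
SYNTHETIC = [generate_bad_event(rng) for _ in range(1000)]
```

Passing the random generator in explicitly keeps the output reproducible, which helps when comparing training runs.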

Visualizing the Data

(Or as I like to call it, let’s make some pretty graphs!)

Before we can do anything with our data, we need to be able to understand it. Visualization can be a great way of showing what we’re trying to detect and understanding patterns within the data.

Active Hours

Probably one of the clearest indicators of the difference between good and bad data is the active hours. Using the event hours of active users from the data, there is a clear difference between good and bad data, as seen in the graph below:

User driven ‘good’ events skyrocket during the standard working hours in their respective time zone, with user events trickling through after closing time. ‘Bad’ events manage a more even line, but with most events happening after legitimate events have finished.

Note: This dataset is not complete, which makes the difference look more obvious. A complete dataset shows the same results, but with the user period being more evenly spread out.

File Name and Path

Looking at what executed and where, I get 194 unique process names and 103 unique file paths. But looking at the distribution of the data, the vast majority is spread across fewer than ten values.

The top ten file names appear as below:

The top ten file paths appear as below:

I can conclude from looking at the data that, while the data is wide, there are definitely commonalities and patterns within it.
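A quick way to check how concentrated a column is, without graphing it, is to count the values and see what share the top few cover. This is just a sketch on made-up names; top_n_share is a hypothetical helper, not something from my notebook.

```python
from collections import Counter


def top_n_share(values, n=10):
    """Return the n most common values and the fraction of rows they cover."""
    counts = Counter(values)
    top = counts.most_common(n)
    covered = sum(count for _, count in top)
    return top, covered / len(values)


# Made-up example column of process names.
names = ["chrome.exe"] * 6 + ["svchost.exe"] * 3 + ["cmd.exe"]
top, share = top_n_share(names, n=2)
```

A share close to 1.0 for a small n is what "wide but concentrated" looks like in numbers.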

Preparing Data

Now that I have the data ready, and I understand what the data looks like, it’s time to get the data into a machine learning appropriate format. Specifically, data is required to be ingested in an integer (or float) format, so I need to perform some conversion on the data.

During the creation of the feature columns, I handled the data in two ways:

Indicator (event hour, event dow),

Embedding (file name, file path)

I used an Indicator column for event hour and event day of week because each has a fixed set of possible values. File name and file path could hypothetically take an infinite number of values in a real-world scenario, so Embedding seemed like a more efficient method to use. You can see the feature column setup here:

    from tensorflow import feature_column

    feature_columns = []

    # Embedding columns for the open-ended string fields.
    for i in ['file_name', 'file_path']:
        feature_columns.append(
            feature_column.embedding_column(
                feature_column.categorical_column_with_vocabulary_list(
                    i, ds1[i].unique()
                ),
                dimension=8
            )
        )

    # Indicator (one-hot) columns for the fields with a fixed set of values.
    for i in ['event_hour', 'event_dow']:
        feature_columns.append(
            feature_column.indicator_column(
                feature_column.categorical_column_with_vocabulary_list(
                    i, ds1[i].unique()
                )
            )
        )
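To make the difference between the two column types more concrete, here is a plain Python sketch of what each encoding produces. It is only an illustration of the idea, not how TensorFlow implements it: the helper names are hypothetical, and the embedding rows are random here, whereas a real model learns them during training.

```python
import random


def build_vocab(values):
    """Map each distinct value to an integer index, in first-seen order."""
    vocab = {}
    for value in values:
        vocab.setdefault(value, len(vocab))
    return vocab


def one_hot(value, vocab):
    """Indicator-style encoding: one slot per known value."""
    vec = [0.0] * len(vocab)
    vec[vocab[value]] = 1.0
    return vec


def make_embedding_table(vocab, dimension, rng):
    """Embedding-style encoding: one short dense row per known value."""
    return [[rng.uniform(-0.05, 0.05) for _ in range(dimension)] for _ in vocab]


def embed(value, vocab, table):
    return table[vocab[value]]


hours = [9, 10, 9, 17]
names = ["cmd.exe", "chrome.exe", "cmd.exe", "svchost.exe"]

hour_vocab = build_vocab(hours)
name_vocab = build_vocab(names)
table = make_embedding_table(name_vocab, dimension=8, rng=random.Random(0))

hour_vec = one_hot(10, hour_vocab)              # length grows with the vocabulary
name_vec = embed("cmd.exe", name_vocab, table)  # length stays at 8
```

The one-hot vector gets one slot longer for every new value, while the embedding stays at eight numbers no matter how many file names turn up.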

Creating and Training the Model

I went with a Neural Network for this project’s model, with the main justification being that I’ve heard that’s the best model for big data. I’m still very new to this, so my model is based fairly heavily off Google’s TensorFlow training models.

Quick justification for each part:

Sigmoid Activation: Squashes values into the range 0 to 1, which suits classifying whether something is suspicious or good.

L2: Regularizer to try and prevent overfitting, without setting weights to 0.

Dropout Layer: Randomly drops 10% of the previous layer’s outputs during training, so we don’t overfit on training data as easily.

RMSprop: ¯\_(ツ)_/¯, I’m not entirely sure what the difference between this and adam is, requires more research.

Metrics: A list value can be passed in for dynamic requesting of metrics.

My model essentially looks like this:

Code is as follows:

    import tensorflow as tf
    from tensorflow.keras import layers

    def create_model(learning_rate, feature_layer, metrics):
        '''Create and compile the neural network model.'''
        model = tf.keras.models.Sequential()

        # Add the feature layer.
        model.add(feature_layer)

        # First hidden layer with 128 nodes.
        model.add(layers.Dense(
            units=128,
            activation='sigmoid',
            kernel_regularizer='l2',
            name='Hidden1'
        ))

        # Second hidden layer with 64 nodes (layer names must be unique).
        model.add(layers.Dense(
            units=64,
            activation='sigmoid',
            kernel_regularizer='l2',
            name='Hidden2'
        ))

        # Dropout layer - 10%
        model.add(layers.Dropout(0.1, name='Dropout'))

        # Output layer. BinaryCrossentropy defaults to from_logits=False,
        # so the output needs a sigmoid to be a probability.
        model.add(layers.Dense(
            units=1,
            activation='sigmoid',
            name='Output'
        ))

        model.compile(
            optimizer=tf.keras.optimizers.RMSprop(learning_rate=learning_rate),
            loss=tf.keras.losses.BinaryCrossentropy(),
            metrics=metrics
        )

        return model

Training the model really doesn’t require much explanation, as I don’t do anything fancy. It’s worth noting that I return the whole history object so I can handle loss graphing dynamically.

    import numpy as np

    def train_model(
        model, dataset, epochs, label_name, batch_size=None, shuffle=True
    ):
        '''Feed dataset and label, then train model.'''
        # Split the dataset into a dict of feature arrays and a label array.
        features = {name: np.array(value) for name, value in dataset.items()}
        label = np.array(features.pop(label_name))

        history = model.fit(
            x=features,
            y=label,
            batch_size=batch_size,
            epochs=epochs,
            shuffle=shuffle
        )

        # Return the epoch list and the whole History object,
        # so the caller can graph any recorded metric.
        return history.epoch, history

Results and Conclusion

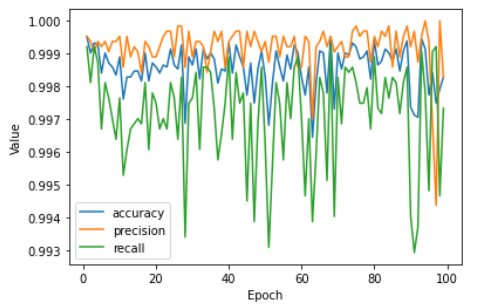

After running the training data through the model, I received quite possibly the weirdest graphs I’ve seen in machine learning:

Evaluating the model against the test data returned a perfect score!

I think it’s very safe to say that something has gone wrong here. My guess is that I had nowhere near enough training data.

While I had a lot of ETW events to go through, the capture period for those events was very limited, just to the working hours of four different days. This would give the model some very easy wins, when finding “bad” indicators, especially around the ‘event hour’ and ‘event day of week’ fields.
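One way to check for this kind of easy win is to measure how many rows have a feature value that only ever appears under a single label. The following is a minimal sketch on made-up rows; separable_fraction is a hypothetical helper and the label encoding (0 = good, 1 = bad) is an assumption.

```python
from collections import defaultdict


def separable_fraction(rows, feature, label="label"):
    """Fraction of rows whose feature value only ever appears with one label."""
    labels_seen = defaultdict(set)
    for row in rows:
        labels_seen[row[feature]].add(row[label])

    pure = sum(1 for row in rows if len(labels_seen[row[feature]]) == 1)
    return pure / len(rows)


# Made-up rows: good events in working hours, bad events mostly after hours.
ROWS = [
    {"event_hour": 9, "label": 0},
    {"event_hour": 10, "label": 0},
    {"event_hour": 14, "label": 0},
    {"event_hour": 14, "label": 1},
    {"event_hour": 23, "label": 1},
    {"event_hour": 2, "label": 1},
]

fraction = separable_fraction(ROWS, "event_hour")
```

A value close to 1.0 means that feature alone almost gives away the label, which would make a perfect test score a lot less surprising.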

While this initial project is a bit of a failure due to bad testing data, I’m going to continue running this ETW capture indefinitely, and it might be a great experiment to come back with a much larger dataset.

As for ‘Is it possible to apply ML to ETW?’, I definitely think so. Although we do not have all the fields that we may want, with efficient capture and pre-processing of data, we may be able to build an effective ML model for multiple different detections.

Definitely a project to come back to in the future.

Wrap Up Comments

As I mentioned a few times, I’m very new to ML and this is my first real project within the Cyber Security space. If you have any feedback on things that I could have done differently, I’d love to hear it!

I just found a dataset of 120GB worth of malicious files, so I think I’ll try and apply some ML across those next!